Credit: Apple

Follow Lifehacker’s ongoing coverage of WWDC 2024.

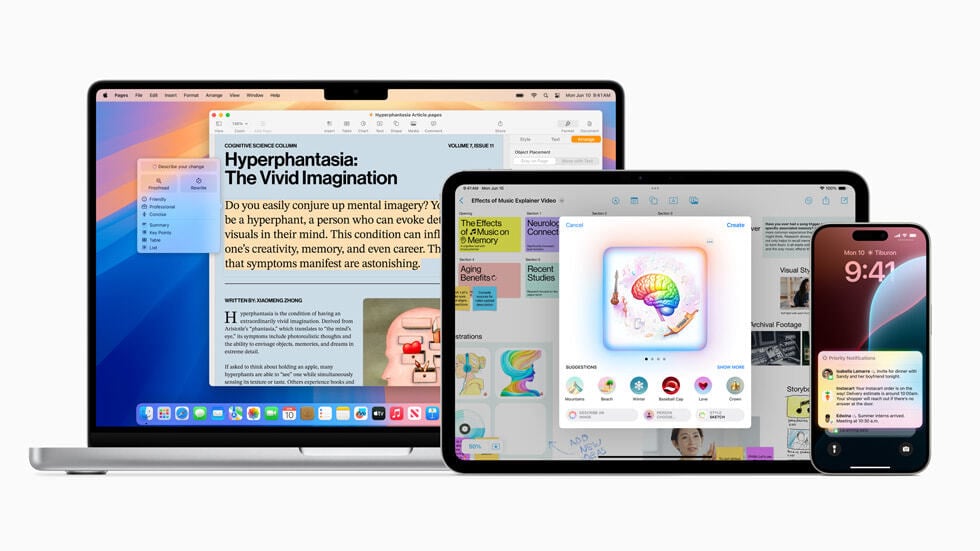

Apple is finally ready for its AI moment, after years of speculation and four generations of devices with almost unused neural engines inside. At WWDC 2024, the company formally announced Apple Intelligence (yes, pun intended), set to release in beta for iPhone, iPad and Mac this fall.

It’ll be interesting to see how Apple interacts with such a nascent technology. As with Apple Vision Pro, the company usually prefers to wait on trends until it can release a refined, largely frictionless take on them. AI, meanwhile, still frequently “gets it wrong,” as Google learned with its AI Overviews feature earlier this month.

Nevertheless, Apple is going full steam ahead with AI in messages, mail, notifications, writing, images, and perhaps most intriguingly, Siri. The company promised it can maintain its reputation for polish, too, with a greater focus on privacy and on-device processing than the competition.

Details on how exactly Apple’s AI works are light, but overall, the company’s promising to do more than Google, Rabbit, or pretty much any competition has done so far. Let’s break it down.

AI in Siri

Credit: Apple

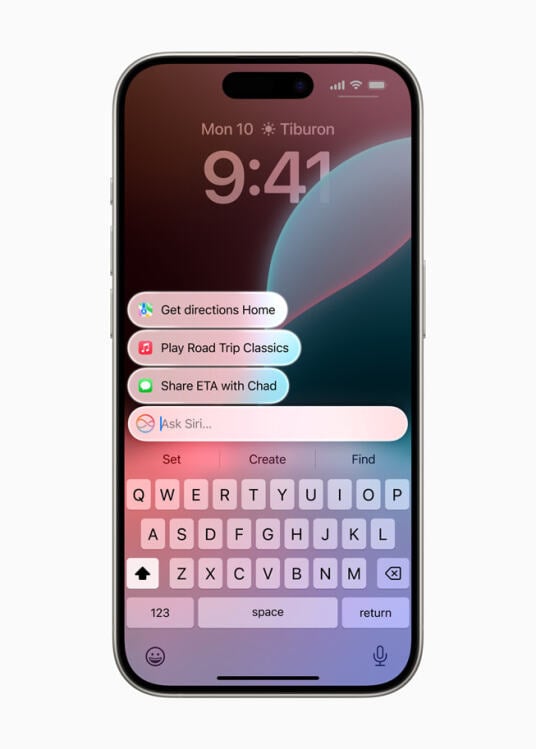

Perhaps the most innovative Apple Intelligence feature is Siri, which is getting a full makeover complete with a new logo.

It’s a moment that’s been a long time coming: Since Siri introduced the world to the digital assistant in 2011, it’s been overtaken by competitors like Google Assistant and Alexa in many respects. Now, Apple is doubling down on Siri, fully revamping it with AI even as Google inches towards replacing Google Assistant with Gemini. The result? A much more natural AI assistant than on Android.

Right now, on Android, replacing Assistant with Gemini will just take you to a shortcut for the web app. Unlike its “dumber” predecessor, Gemini can’t set reminders, adjust phone settings, or open apps, meaning its promises of more functionality actually come with less functionality.

That’s not supposed to be the case with the new Siri, which will maintain all its “dumb” features, but come with new contextual awareness. Now, when you open Siri, it’ll take a look at what’s on your screen, and will be able to offer advice based on what’s displayed. You could be looking at the Wikipedia page for Mount Rushmore, for instance, and ask, “What’s the weather here?” to get Siri to tell you a forecast for your trip.

Contextual awareness isn’t limited to what you have pulled up in the moment, either. Apple says Siri will also be able to search your libraries and apps to take “hundreds of new actions,” even in third-party programs. Say you save this article to your reading list right now. When Apple Intelligence comes to your iPhone, you could ask Siri to, “Bring up the Lifehacker article about WWDC from my reading list” to access it again.

Or, more personally, say you’re texting a friend about a podcast. With the new Siri, you could just ask, “Play that podcast Dave recommended this weekend,” and Siri will know what you’re talking about and pull it up.

The implications here are big, both for usefulness and privacy. Overall, promised contextual features include:

- Contextual answers for questions
- Contextual search in photos and videos (for example, you could ask Siri to bring up all photos of you wearing a red shirt)
- Ability to take contextual actions for you, like adding an on-screen address to a contact card or applying auto-enhance touch-ups to photos for you

But Apple’s also hoping to bring an Apple Genius into your home, as Siri is coming preloaded with tutorials on how to use your iPhone, iPad, or Mac. Just ask the assistant, “How to turn on dark mode” or, “How to schedule an email,” and Siri will reference its training material and feed you an answer through an on-screen notification, rather than sending you to a help page. (We’ll still be here for all your tech advice needs.)

One of Siri’s more traditional, prompt-based features is the ability to create custom, AI-powered video montages. Right now, Apple’s Memory Collages just automatically generate in the background, algorithmically tying together photos the OS thinks are related and setting them to background music the software thinks will fit. Soon, you’ll be able to give Siri specific directions, referencing contacts, an activity or place, and a style of music. Siri will then contextually generate a fitting montage, with music pulled from Apple Music.

There are also typical AI chatbot features, like the ability to ask questions. Oddly, Apple wasn’t clear on whether Siri will be able to answer questions directly (at least those not related to Apple devices), but the company has a back-up: Through Siri, you can ask ChatGPT your questions.

Because Apple’s privacy settings differ from ChatGPT’s (more on that later), Siri will prompt you to give it permission for ChatGPT each time you use it. Then, the assistant will ask your question for you, no account required. Like DuckDuckGo, Apple will also hide your IP address when using ChatGPT for you, and the company promises OpenAI will not log your requests. ChatGPT subscribers can also link their accounts to Siri for access to paid features, although Apple does warn that free users will face the typical data use limitations.

Siri’s AI features will be usable across iPhone, iPad, and Mac, and present what looks like a more natural AI-powered assistant than Google’s approach of starting over with Gemini. That said, if it seems like Siri is still limited compared to what other LLM chatbots can do, that’s because Apple Intelligence is much bigger than Siri.

Apple Intelligence is going above and beyond the Google Pixel

A big part of Apple’s AI presentation this year seemed targeted at Pixel, specifically its AI “feature drops.” Until now, Pixel transcriptions and Magic Editor have been big exclusives for Google, but Apple Intelligence is finally giving Google’s biggest competitor a shot in the same arena.

First, iOS, iPadOS, and macOS devices are getting their own takes on Magic Eraser and Live Transcription. In the Photos app, users can tap a new Clean Up icon to circle or tap subjects they want cut out of a picture. Photos will remove the offending subject, then use generative AI to fill in where they were. It’s not quite on the level of Magic Editor, which allows you to move subjects around once selected, but Google is firmly on notice.

Similarly, the Notes app will be able to summarize and transcribe audio recordings for you, a boon for journalists like me. I’ve had colleagues choose the Pixel for its transcribing feature alone, and now I’ll finally be able to keep up on my iPhone. Even better—Notes will also be able to transcribe phone calls live.

That does present a legal issue, so actual usage is likely going to differ from state to state and from country to country, as recording laws differ depending on where you are. For now, Apple says the Phone app will warn you when a recording is about to start.

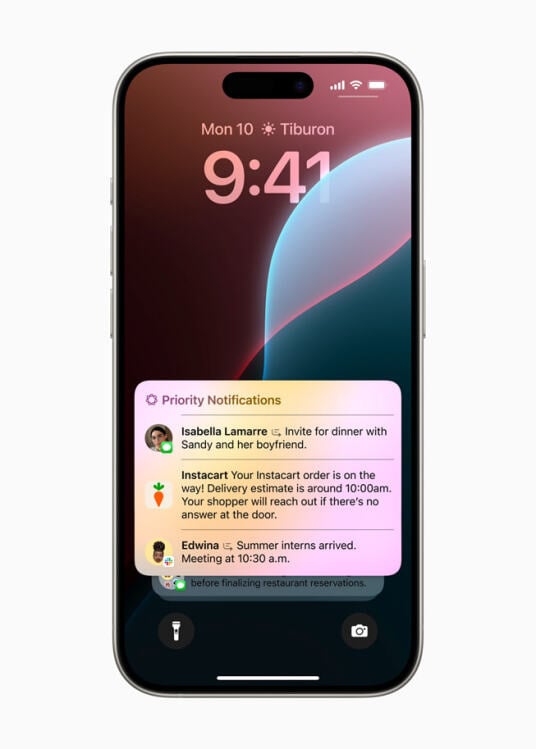

But beyond features that are similar to those on Google’s flagship, Apple’s also developing unique draws of its own. Here, the company is making it easier to manage your notifications and mail.

The standout features here are Priority Messages and Priority Notifications. With Priority Messages, Apple AI attempts to find “the most urgent emails” and push them to the top of your inbox. Priority Notifications takes a similar approach, but with lock-screen notifications from texts and apps.

With both of these, you’ll have the option to have the AI write you a summary of the mail or notification rather than previewing its content, helping you quickly browse your feed. In Mail, you’ll actually be able to get summaries across your whole inbox.

Apple pitches this as a great way to stay up-to-date with time sensitive information like boarding passes. Additionally, in Mail, you’ll be able to use Smart Reply to have AI quickly type out a reply for you based on the context of your email. You’ll also be able to get summaries across an entire conversation, not just for the first email.

With these updates, Apple is finally coming for Google’s software, hoping to dethrone the Pixel from being “the smartest smartphone.” But these innovations aren’t without risk. Take the Reduce Interruptions Focus mode, which will use AI to show “only the notifications that might need immediate attention, like a text about an early pickup from daycare.” Relying on Apple Intelligence for what to show you is putting a lot of trust in an untested model, although it’s promising for Apple that it has the confidence to push that kind of feature out at launch.

Apple can help you write and generate images

Speaking of risk, it’s time to talk about the bread and butter of AI: image and text generation.

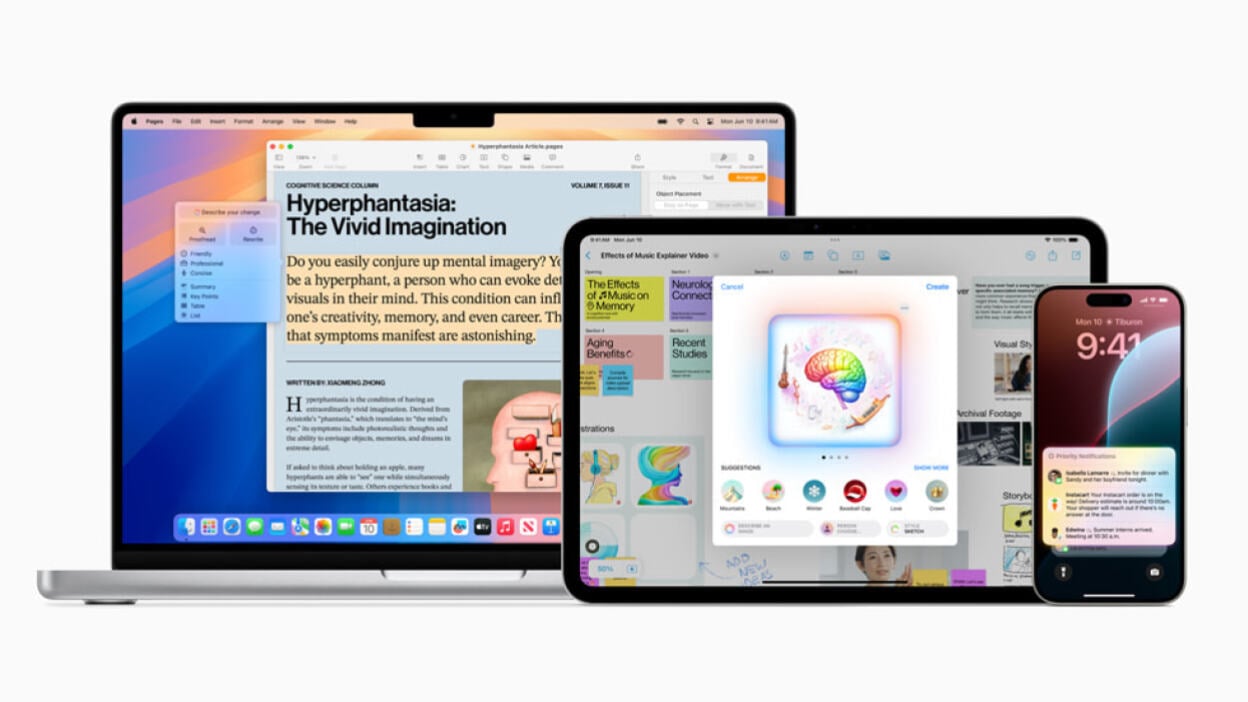

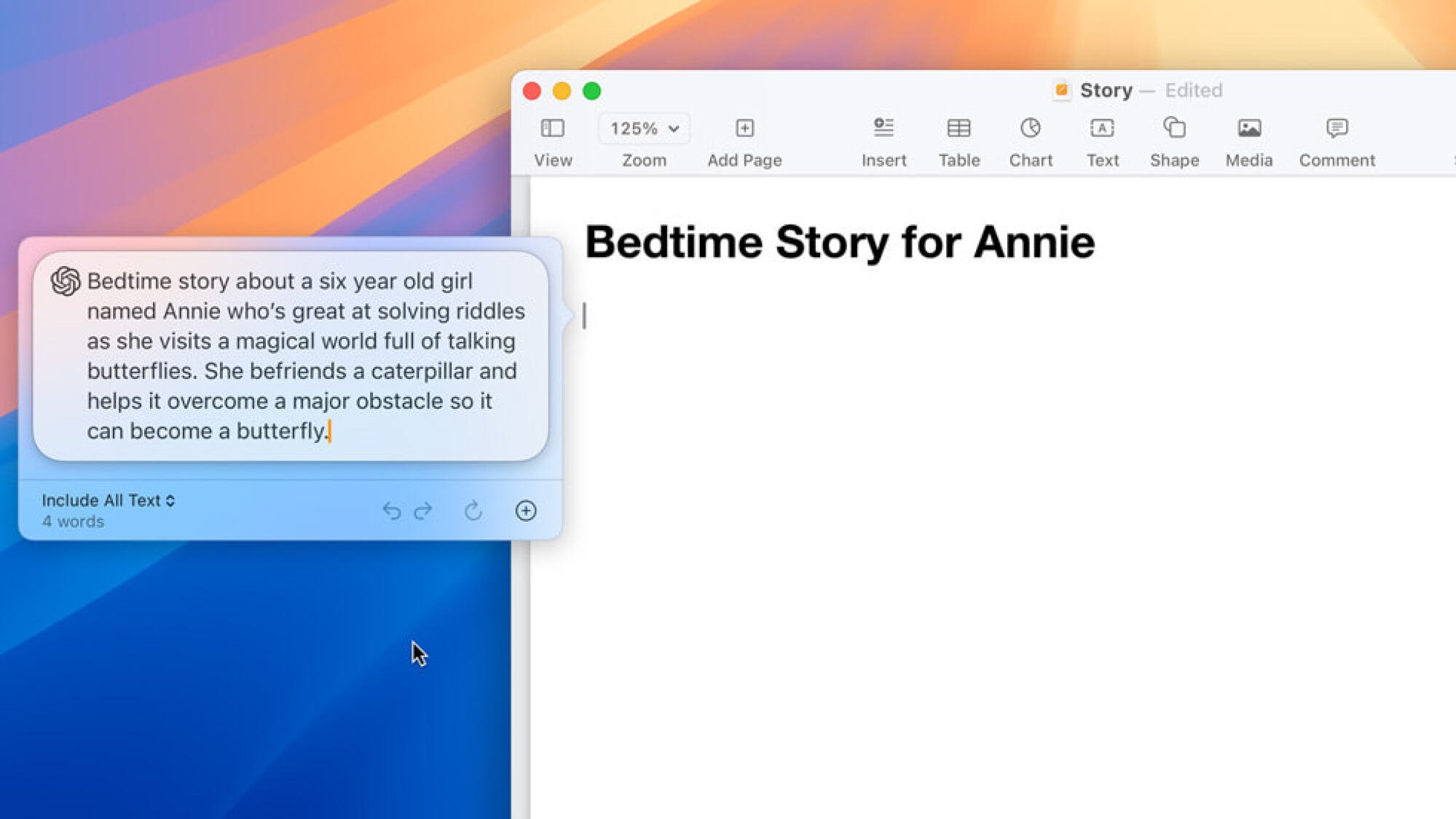

Even as Google is telling people to use “squat plugs,” Apple apparently feels confident enough in its models that it’s trusting them to help you be creative. Enter Rewrite, Image Playground, and Genmoji. Across compatible first-party and even third-party apps, these will allow you to create content using both Apple’s own models, and in some cases, ChatGPT.

Rewrite is the most familiar of these. Here, Apple is promising system-level AI help with text “nearly everywhere” you write, including in Notes, Safari, Pages and more via developer SDKs. From a right-click style menu on highlighted text, users will be able to give Apple Intelligence a custom prompt, or select from a number of pre-selected tones, and the AI will then rewrite the text accordingly.

Not into having AI change your text? It’ll also be able to proofread it to point out errors, summarize it (useful if you’re reading rather than writing), or reformat it into a table or list.

It’s similar to Chrome’s new ability to rewrite text on a right-click, but with way more options and supposedly available across many more apps. It’s also more accessible than Copilot, which lives in a separate menu, siloed away from the rest of Windows.

You’ll also be able to generate text from scratch, although Apple will lean on ChatGPT for this.

Credit: Apple

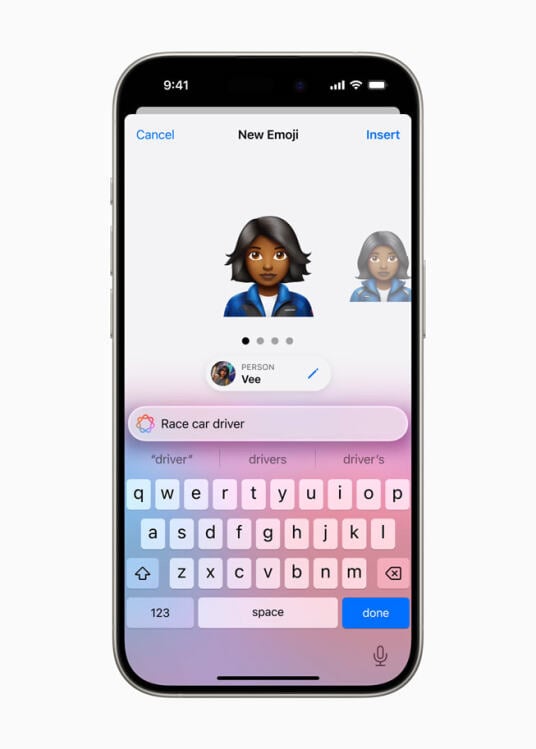

Image Playground and Genmoji are where things get more novel. Instead of having to go to a specific website like Dall-E or Gemini, Apple devices will now have image generation baked right into the operating system.

Available as its own app, baked into Messages, or integrated into other compatible apps via an SDK, Image Playground looks like your typical AI art generator, but powered by the same type of contextual analysis as Siri. For instance, you could give it a prompt, tell Image Playground to incorporate someone from your contacts list into it, and get art with a caricature of that person.

Again, Apple’s putting a lot of faith in its AI here. Say I send someone an image made with Image Playground and it turns out to be an unflattering depiction of them: Yikes.

That said, it seems like there might be guardrails on the experience. Apple’s marketing language is a bit vague as to what the limits are here, but even with a prompt box prominently displayed in its examples, Apple is consistently telling us that we’ll have to “choose from a range of concepts” including “themes, costumes, accessories, and places.” It’s possible Apple won’t let users generate controversial images, an issue Bing and Meta have previously contended with.

But let’s say you don’t want a full image with lots of detail anyway. Apple’s also introducing Genmoji, which are similar to Meta’s AI stickers. Here, you’ll be able to give Apple’s AI a prompt and get back custom emoji done up in a similar style to Unicode’s official options. Again, these can include cartoon representations of people from your contacts list, but like emoji, they can also be added inline to messages or shared as a sticker react. Again, we don’t know the limits of what Apple will allow here.

We’ll have to wait until Apple’s AI images drop to properly see how well they compete against existing options, but perhaps the most interesting thing here is the ability to naturally generate images in existing apps. While Apple promises this will extend beyond Notes, one example the company showed had it selecting a sketch in Notes and generating a full piece of art based on it. Another had the AI simply generate a brand new image in Notes based on surrounding text.

That convenience, especially as AI remains split across dozens of sites and services, is sure to be a big selling point here.

Apple is promising private, on-device AI

Apple hasn’t been entirely forthcoming about the training materials for its AI, but something the company did push was its privacy.

Recently, moves from Meta and Adobe have raised concerns about AI’s access to users’ data. Apple wants to put any such worries about its own AI to bed right away.

According to Apple, any data accessed by its AI is never stored and is used only for requests. Further, Apple is making its servers’ code accessible to “independent experts” for review. But, at the same time, the company is looking to reduce the number of times you have to access the cloud as much as possible.

Enter the A17 Pro chip (introduced in the iPhone 15 Pro and Pro Max) and the M-series of chips (used in iPads and Macs starting from 2020). Devices with these chips all have access to neural engines that Apple says will allow them to complete “many” requests on-device, without your information ever leaving your phone.

How exactly the split between on-device and on-cloud tasks will be handled is still up in the air, but Apple says that Apple Intelligence itself will be able to determine which requests your device is powerful enough to handle on its own and which will need help from servers before it decides where to send them.

While that’s still a promise, this would be a huge win for Apple, with competing features like Magic Editor and Gemini still requiring constant internet connections.

When can I try Apple Intelligence?

Apple didn’t give any specific dates on when Apple Intelligence will go live, instead giving viewers two windows to look forward to.

First, the company said Apple Intelligence will be “available to try out in U.S. English this summer,” although given what it said next, that’s likely to be a limited demo.

That’s because the full beta of Apple Intelligence is set for this fall, meaning it’ll likely come after the full releases of iOS 18, iPadOS 18, and macOS 15 via an update.

Perhaps the biggest hurdle Apple has to overcome with its AI, beyond making good on its security promises and ensuring its content generation doesn’t ruffle any feathers, is availability. While the promise of having most AI on-device is great for privacy and even for situations where internet connectivity is limited, it does have a caveat: Apple’s announcement for its AI only mentions it coming to iPhone 15 Pro, iPhone 15 Pro Max, and iPads or Macs with an M1 chip or later. Similarly, Siri and device language must be set to U.S. English to begin.